seo-tools: a CLI for SEO audits

Open source SEO. Crawl sites for broken links, check Google Search Console (GSC) for top queries, perform network analysis, and power content audits. All Python: easily extended and automated in any environment.

What does seo-tools do?

Checks links on large sites

Starting from a seed URL, crawls a site and reports broken links, redirects, and other responses. The crawl can also parse sitemap.xml files.
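As a rough illustration of the sitemap step, the sketch below pulls page URLs out of a sitemap.xml document using only the Python standard library. The function name `parse_sitemap_urls` and the sample sitemap are hypothetical and are not taken from seo-tools itself.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap_urls(xml_text):
    """Return the <loc> values found in a sitemap (or sitemap index) document."""
    root = ET.fromstring(xml_text)
    urls = []
    for loc in root.findall(".//sm:loc", SITEMAP_NS):
        if loc.text and loc.text.strip():
            urls.append(loc.text.strip())
    return urls


# Hand-written sample; a real crawl would fetch this document over HTTP.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>"""

seed_urls = parse_sitemap_urls(SAMPLE_SITEMAP)
print(seed_urls)
```

The returned URLs could then be queued alongside the seed URL for status checks.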

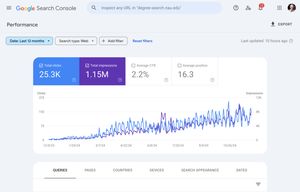

Calls the GSC API for query audits

Google Search Console is a powerful tool for auditing your sites, but using its API can present a barrier for non-technical auditors who want more granular data.
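To make the "top queries" idea concrete, here is a minimal sketch of the two pieces of data handling involved: building a Search Analytics query body and ranking the returned rows by clicks. The helper names `build_query_request` and `top_queries` are hypothetical, the sample response is hand-written rather than real data, and the authenticated API call itself is deliberately left out.

```python
def build_query_request(start_date, end_date, row_limit=25):
    """Build a request body for a Search Analytics query grouped by search query."""
    return {
        "startDate": start_date,  # dates use the YYYY-MM-DD format
        "endDate": end_date,
        "dimensions": ["query"],
        "rowLimit": row_limit,
    }


def top_queries(response, limit=10):
    """Return (query, clicks, impressions) tuples sorted by clicks, highest first."""
    rows = response.get("rows", [])
    ranked = sorted(rows, key=lambda row: row.get("clicks", 0), reverse=True)
    return [
        (row["keys"][0], row.get("clicks", 0), row.get("impressions", 0))
        for row in ranked[:limit]
    ]


# Hand-written sample shaped like an API response; the numbers are made up.
sample_response = {
    "rows": [
        {"keys": ["broken link checker"], "clicks": 4, "impressions": 210},
        {"keys": ["seo audit cli"], "clicks": 12, "impressions": 340},
        {"keys": ["sitemap parser"], "clicks": 7, "impressions": 95},
    ]
}

request_body = build_query_request("2024-01-01", "2024-01-31")
ranking = top_queries(sample_response, limit=2)
print(ranking)
```

Keeping the request building and ranking separate from the network call makes both easy to test without credentials.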

Crawls and scrapes page content

Log archival content, audit existing material, identify hidden page content, and more.
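One way hidden content could be separated from visible copy is sketched below with the standard library's `html.parser`. This is an assumption about the approach, not the seo-tools implementation: the `ContentExtractor` class is hypothetical, it only recognizes the `hidden` attribute and inline `display:none` styles, and it assumes reasonably well-formed markup with closed tags.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ContentExtractor(HTMLParser):
    """Collect text nodes, split into visible text and text inside hidden elements."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.hidden = []
        self._hidden_depth = 0  # greater than 0 while inside a hidden element

    @staticmethod
    def _is_hidden(attrs):
        attr_map = dict(attrs)
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        return "hidden" in attr_map or "display:none" in style

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._hidden_depth or self._is_hidden(attrs):
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._hidden_depth:
            self._hidden_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text:
            (self.hidden if self._hidden_depth else self.visible).append(text)


page_html = (
    "<main><h1>Guide</h1><p>Visible copy.</p>"
    '<div style="display: none"><p>Hidden <b>promo</b> text</p></div>'
    "<p hidden>Legacy note</p></main>"
)

extractor = ContentExtractor()
extractor.feed(page_html)
extractor.close()
print(extractor.visible)
print(extractor.hidden)
```

Content hidden through external stylesheets or scripts would need a rendering step that this sketch does not attempt.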

Outputs to CSV, Markdown, and HTML

CSV is an ideal format to quickly pass data into other scripts or tools. For non-tabular data, like page content, seo-tools also supports exporting to Markdown and HTML formats.
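The split between tabular and non-tabular output can be sketched as follows. The helper names `to_csv` and `to_markdown` and the sample rows are hypothetical; the actual export code and column names in seo-tools may differ.

```python
import csv
import io


def to_csv(rows, fieldnames):
    """Serialize a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_markdown(title, paragraphs):
    """Render scraped page content as a simple Markdown document."""
    lines = [f"# {title}", ""]
    for paragraph in paragraphs:
        lines.extend([paragraph, ""])
    return "\n".join(lines).rstrip() + "\n"


link_rows = [
    {"url": "https://example.com/", "status": 200},
    {"url": "https://example.com/old", "status": 404},
]

csv_text = to_csv(link_rows, ["url", "status"])
markdown_text = to_markdown("About us", ["First paragraph.", "Second paragraph."])
print(csv_text)
print(markdown_text)
```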

How do I use seo-tools to improve my sites?

I am not interested in growth at the expense of my users. seo-tools takes the same approach. Features are focused on helping improve content strategy and user experience, while better understanding how users want to consume content.

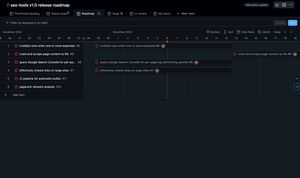

Up next on the seo-tools roadmap

seo-tools is pre-release software. Track progress toward the seo-tools v1.0 release milestone.

Network analysis and visualization

Currently testing a feature that automates PageRank and centrality analysis and exports interactive network visualizations of site architecture based on link relationships.

Integrate with SEO requests portal web app

I aim to integrate this project into my SEO request portal as a module to power automated requests.

About the stack

All Python

Distributing this application as a Python package allows for easy installation, including dependencies, using Python's pip package manager and virtual environments. It also lets me use the underlying functionality as modules in other projects.

On-disk SQLite

Because the SQLite database is kept on disk, the full output of link status crawls remains available for further analysis using relational queries even after audits are completed. And if something fails during a crawl, all data is preserved for further inspection.
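A small sketch of what that follow-up analysis might look like, using Python's built-in `sqlite3` module. The `links` table, its columns, and the sample rows are assumptions made for illustration; the real seo-tools schema is not shown here.

```python
import os
import sqlite3
import tempfile

# Hypothetical on-disk database path; a temp directory keeps the example tidy.
db_path = os.path.join(tempfile.mkdtemp(), "crawl.db")

# During the crawl: write each checked link to disk.
conn = sqlite3.connect(db_path)
with conn:  # commits on success
    conn.execute(
        "CREATE TABLE links (source_url TEXT, target_url TEXT, status INTEGER)"
    )
    conn.executemany(
        "INSERT INTO links VALUES (?, ?, ?)",
        [
            ("/", "/about", 200),
            ("/", "/old", 404),
            ("/about", "/old", 404),
            ("/about", "/gone", 410),
        ],
    )
conn.close()

# After the audit (or after a failed crawl): reopen the file and query it.
conn = sqlite3.connect(db_path)
broken = conn.execute(
    """
    SELECT target_url, COUNT(*) AS inbound
    FROM links
    WHERE status >= 400
    GROUP BY target_url
    ORDER BY inbound DESC, target_url
    """
).fetchall()
conn.close()
print(broken)
```

Because the data lives in a file rather than in memory, the second connection sees everything the crawl committed, even if the crawl process has exited.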

HTTP requests

Python HTTP request packages allow for intelligent handling of HTTP responses, supporting features that avoid downloading large documents or following long redirect chains.
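The two safeguards mentioned here can be sketched independently of any particular HTTP library. The example below is a standard-library stand-in, not the seo-tools code or the API of a specific requests package: `follow_redirects`, `should_download_body`, the injected `fetch_head` callable, and the fake site data are all hypothetical, and header names are assumed to use the capitalization shown.

```python
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}


def follow_redirects(url, fetch_head, max_hops=5):
    """Follow redirects one hop at a time.

    fetch_head(url) must return (status, headers) without downloading a body.
    Returns (chain, final_status); final_status is None if max_hops was exceeded.
    """
    chain = [url]
    while True:
        status, headers = fetch_head(chain[-1])
        location = headers.get("Location")
        if status not in REDIRECT_CODES or not location:
            return chain, status
        if len(chain) > max_hops:
            return chain, None  # give up on long chains and loops
        chain.append(urljoin(chain[-1], location))


def should_download_body(headers, max_bytes=2_000_000):
    """Only fetch the body for HTML documents that are not too large."""
    content_type = headers.get("Content-Type", "").split(";")[0].strip().lower()
    if content_type != "text/html":
        return False
    length = headers.get("Content-Length")
    if length is not None and length.isdigit() and int(length) > max_bytes:
        return False
    return True


# Fake responses standing in for real HEAD requests.
FAKE_SITE = {
    "https://example.com/a": (301, {"Location": "/b"}),
    "https://example.com/b": (302, {"Location": "https://example.com/c"}),
    "https://example.com/c": (200, {"Content-Type": "text/html; charset=utf-8",
                                    "Content-Length": "5120"}),
    "https://example.com/loop": (301, {"Location": "/loop"}),
}


def fake_fetch_head(url):
    return FAKE_SITE[url]


chain, status = follow_redirects("https://example.com/a", fake_fetch_head)
loop_chain, loop_status = follow_redirects(
    "https://example.com/loop", fake_fetch_head, max_hops=3
)
print(chain, status)
print(len(loop_chain), loop_status)
```

Passing the fetch function in as an argument keeps the redirect logic testable offline; a real crawler would supply a function that issues HEAD or streamed requests.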

networkx

The networkx package provides a wide range of APIs to enable complex analysis of a large number of nodes. This package supports in-progress features including "pagerank" analysis, network centrality, and grouping.
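For readers unfamiliar with PageRank, the idea can be sketched in plain Python. This is a hand-rolled power iteration written for illustration, not networkx code and not the seo-tools feature; networkx offers the same analysis through `networkx.pagerank` on a directed graph. The function signature and the sample link map are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank by power iteration.

    links maps each page to the list of pages it links to.
    """
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}

    for _ in range(iterations):
        # Rank held by pages with no outgoing links is spread over all pages.
        dangling = sum(rank[node] for node in nodes if not links.get(node))
        new_rank = {
            node: (1.0 - damping) / n + damping * dangling / n for node in nodes
        }
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical internal link structure of a three-page site.
site_links = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/", "/about"],
}

scores = pagerank(site_links)
for page, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

Pages that receive links from many well-linked pages end up with higher scores, which is why the measure is useful for spotting under-linked content in a site's architecture.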

Cloud & VPS friendly

This project can easily be run anywhere—on remote servers, on cloud infrastructure, or in a container swarm. Audits can run on premises or behind a VPN, and can be automated with a simple cron job.