Visualizing topic networks to strategize for pillar-based marketing

February 2024

About the stack

- Python

- Web scraping

- requests_html

- networkx

- pyvis

- HTML

This project uses Python libraries for web scraping, graph construction, and visualization to parse Google Search HTML and produce network visualizations.

Thinking beyond "keyword difficulty" and traditional backlinks to embrace topical expertise

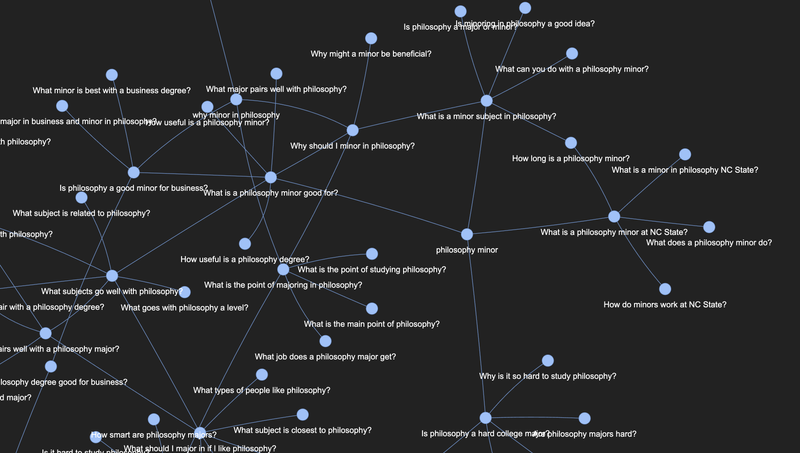

As SEOs, we can adapt the robust network analysis frameworks that data scientists use (like the popular networkx Python library) to explore connections between topics.

By scraping Google’s “People also ask” feature based on seed keywords, we can create, analyze, cluster, and map complex networks of related topics and questions to inform content strategy.

Why use “People also ask” for keyword research at all?

Traditional keyword research and rank tracking have their place, but it’s worth remembering that the discipline has humble origins in the “Google AdWords” platform. Keyword research tools often ignore long-tail keywords, or have so little data on them that their value is hard to judge. Features like “People also ask” can show you the breadth of topics Google expects users to explore within a given pillar, helping you anticipate user needs, rank for their searches, and distinguish yourself as a topical authority to your organic users.

How do I get started with web scraping for “People also ask” lists?

Many technologies allow you to submit automated HTTP requests, parse the responses, and pull out data. Some, like the Python requests_html library, go further and render pages in an automated browser to simulate real users. Building a simple queue, automating browser requests, and parsing for select data lets us extract exactly the search queries we’re looking for.
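As a small illustration of the request side, the sketch below builds a search URL from a seed keyword using only the standard library. The helper name build_search_url is hypothetical and not part of the original script; the URL pattern is the same one the scraper in this post uses.

```python
from urllib.parse import urlencode

def build_search_url(query):
    """Build a Google search URL for a seed keyword (hypothetical helper)."""
    # urlencode escapes spaces and punctuation so the keyword is safe in a URL
    return f"https://google.com/search?{urlencode({'q': query})}"

print(build_search_url("ba in philosophy"))
```

Keeping URL construction in one place makes it easier to add other query parameters later without touching the scraping logic.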

Import the required libraries

In this case, we’ll be using the HTMLSession class from the requests_html library, a few functions from urllib.parse, the Queue class from the queue module, and the pyvis and networkx libraries. urllib and queue ship with Python; remember to install requests_html, pyvis, and networkx using pip if you haven’t already.

from requests_html import HTMLSession
from urllib.parse import urlparse, parse_qs, urlencode
from queue import Queue
from pyvis.network import Network
import networkx as nx

Create a function just to scrape Google for “People also ask” queries

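def get_people_also_ask_options(query):
    """Return the "People also ask" questions Google shows for a query."""
    found_questions = []
    with HTMLSession() as session:
        r = session.get(f'https://google.com/search?{ urlencode({"q": query}) }')
        # Render the page's JavaScript, then wait 5 seconds for content to load
        r.html.render(sleep=5)
        try:
            # LIKELY BREAK POINT: index 3 depends on Google's current markup,
            # and the parent div may not stay at this level forever.
            for link in r.html.find("div", containing="People also ask")[3].links:
                if "google.com" in link:
                    try:
                        question = {
                            'query': parse_qs(urlparse(link).query)['q'][0],
                            'source query': query,
                            'hostname': urlparse(link).hostname,
                        }
                        found_questions.append(question)
                    except (KeyError, IndexError):
                        # Skip links that have no usable "q" parameter
                        pass
        except Exception:
            # Dump the HTML so a changed page layout is easier to diagnose
            print(r.html.html)
    return found_questions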
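The link-parsing step inside that function can be tried offline, without rendering a page. The sketch below pulls the question text out of a single link; the helper name extract_question and the sample URL are made up for illustration, and the hostname check is slightly stricter than the substring test used in the scraper.

```python
from urllib.parse import urlparse, parse_qs

def extract_question(link, source_query):
    """Return a question dict for a Google search link, or None if it doesn't qualify."""
    parsed = urlparse(link)
    # Ignore links that don't point at a Google host
    if not parsed.hostname or "google.com" not in parsed.hostname:
        return None
    params = parse_qs(parsed.query)
    # Ignore Google links that carry no search query
    if "q" not in params:
        return None
    return {
        "query": params["q"][0],
        "source query": source_query,
        "hostname": parsed.hostname,
    }

# Made-up link in the shape the scraper expects
sample = "https://www.google.com/search?q=Is+a+philosophy+degree+worth+it%3F&sa=X"
print(extract_question(sample, "philosophy degrees"))
```

Returning None instead of raising keeps the caller free of nested try blocks, at the cost of an explicit check before appending.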

Add supporting functions

We’re going to add a few additional functions to count total scrapes (so we can add a limit), create network graphs with networkx, and visualize those network graphs with pyvis.

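scrape_count = 0

def global_scrape_count():
    """Increment and return the running total of scrapes."""
    global scrape_count
    scrape_count += 1
    return scrape_count

def create_network_graph(log, nodes):
    """Build a directed graph with an edge from each source query to each question it surfaced."""
    # The second parameter is named nodes so it doesn't shadow the built-in list
    G = nx.MultiDiGraph()
    G.add_nodes_from(nodes)
    G.add_edges_from([(i['source query'], i['query']) for i in log])
    return G

def visualize_network_graph(graph):
    """Render the graph to an HTML file with pyvis."""
    net = Network(height="100vh", width="100vw", bgcolor="#222222", font_color="white")
    net.from_nx(graph)
    net.save_graph("networkx-pyvis.html")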
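Before rendering anything, it can help to sanity-check the data that feeds the graph. The sketch below takes a made-up log in the same shape that get_people_also_ask_options returns and counts, using only the standard library, how many distinct source queries lead to each question. That count is a rough stand-in for a node's in-degree; networkx reports the equivalent figure through G.in_degree(). The sample questions and the helper name count_incoming_sources are illustrative assumptions.

```python
from collections import Counter

def count_incoming_sources(log):
    """Count how many distinct source queries point at each question."""
    # Deduplicate (source, target) pairs so repeated scrapes don't inflate counts
    unique_edges = {(entry["source query"], entry["query"]) for entry in log}
    return Counter(target for _, target in unique_edges)

# Made-up log entries for illustration only
sample_log = [
    {"source query": "philosophy degrees", "query": "Is a philosophy degree worth it?", "hostname": "www.google.com"},
    {"source query": "ba in philosophy", "query": "Is a philosophy degree worth it?", "hostname": "www.google.com"},
    {"source query": "ba in philosophy", "query": "What jobs can you get with a BA in philosophy?", "hostname": "www.google.com"},
]

for question, count in count_incoming_sources(sample_log).most_common():
    print(count, question)
```

Questions reached from several different seeds may be good candidates for pillar-level content, though that reading depends on how the seeds were chosen.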

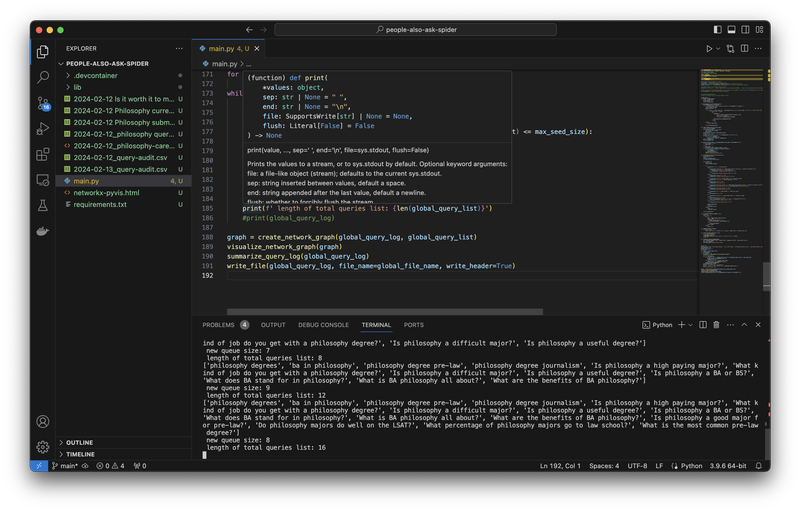

Once all that’s done, you can pull the whole thing together with a single script

In this case, I named my script main.py, so you can run the scrape with python3 main.py in any terminal emulator where you’ve installed the dependencies.

global_query_list = ['philosophy degrees', 'ba in philosophy', 'philosophy degree pre-law', 'philosophy degree journalism']
max_seed_size = 10
global_query_log = []

# Seed the queue with the starting keywords
q = Queue()
for query in global_query_list:
    q.put(query)

while q.qsize() > 0:
    new_queries = get_people_also_ask_options(q.get())
    #print(new_queries)
    for i in new_queries:
        if (i['query'] not in global_query_list) and (len(global_query_list) <= max_seed_size):
            # Still within the limit: remember the question and crawl it later
            global_query_list.append(i['query'])
            q.put(i['query'])
        elif (i['query'] not in global_query_list):
            # Past the limit: keep the question as a node but don't crawl it
            global_query_list.append(i['query'])
        # Log every result so each one becomes an edge in the graph
        global_query_log.append(i)
    print(global_query_list)
    print(f' new queue size: {q.qsize()}')
    print(f' length of total queries list: {len(global_query_list)}')

#print(global_query_log)
graph = create_network_graph(global_query_log, global_query_list)
visualize_network_graph(graph)

# summarize_query_log, write_file, and global_file_name are not defined in this post;
# supply your own versions before uncommenting these calls.
#summarize_query_log(global_query_log)
#write_file(global_query_log, file_name=global_file_name, write_header=True)
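The crawl loop above can be rehearsed offline by swapping the scraper for a stub, which avoids sending requests to Google while you tune the limits. In the sketch below, fake_fetch, its canned data, the crawl function, and the max_scrapes cap are all hypothetical; the cap shows one way a scrape counter could be used to stop the crawl early.

```python
from queue import Queue

# Canned results standing in for live scrapes (made-up data)
FAKE_RESULTS = {
    "philosophy degrees": ["is philosophy hard", "philosophy jobs"],
    "is philosophy hard": ["philosophy jobs", "what is logic"],
    "philosophy jobs": ["philosophy degrees"],
}

def fake_fetch(query):
    """Stub scraper returning dicts shaped like the real function's output."""
    return [{"query": target, "source query": query, "hostname": "www.google.com"}
            for target in FAKE_RESULTS.get(query, [])]

def crawl(seeds, fetch, max_seed_size=10, max_scrapes=5):
    """Breadth-first crawl that stops after max_scrapes calls to fetch."""
    seen, log, scrapes = list(seeds), [], 0
    q = Queue()
    for seed in seeds:
        q.put(seed)
    while not q.empty() and scrapes < max_scrapes:
        scrapes += 1
        for item in fetch(q.get()):
            if item["query"] not in seen:
                # Only queue new questions while the list is within the limit
                if len(seen) <= max_seed_size:
                    q.put(item["query"])
                seen.append(item["query"])
            # Log every result so it can become an edge later
            log.append(item)
    return seen, log, scrapes

seen, log, scrapes = crawl(["philosophy degrees"], fake_fetch)
print(seen, len(log), scrapes)
```

Passing the fetch function in as an argument means the same loop can run against the stub in testing and against get_people_also_ask_options in a real scrape.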