Always-on SEO data requests portal

November 2023

About the stack

- Python

- Flask

- Google Search Console

- Chart.js

- Bootstrap

- MariaDB

- Docker

I chose to build an SEO requests portal in Python because it’s the language I was already using to analyze data for incoming requests. Each new feature I roll out lets me spend less time responding to mundane data requests and more time on new analysis.

Automation helps small teams stay on top of requests and make time for analysis

This SEO requests portal automates many of the common requests I receive, such as pulling the top queries for a site or generating a list of all pages and documents for a migration, which frees up my time for analysis and new opportunities.

Because I am a solo SEO in a large organization, time is often my biggest constraint. In response, I started building a web-based requests portal that I can update whenever new automation-friendly tasks come up.

Why go through so much effort to create an SEO requests portal?

As I discussed in my post about using Grafana to visualize Google Search Console data, there are easier and harder paths for sharing search data with your clients and stakeholders. This is admittedly one of the harder paths. However, because I already do a significant portion of my analysis in Python, this requests portal is a logical way to extend my capacity. Instead of receiving requests and manually feeding the details into Python scripts on my local machine, my clients and peers can use the portal’s interface to submit requests and receive results quickly.
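The submit-and-retrieve workflow described above can be sketched as a small request queue. This is a minimal sketch, not the portal’s actual code: it uses Python’s built-in `sqlite3` as a stand-in for MariaDB, and the table layout, the status values (`pending`, `done`, `failed`), and the `handlers` mapping from report types to Python functions are all hypothetical.

```python
import json
import sqlite3
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical table layout; sqlite3 stands in for MariaDB in this sketch.
SCHEMA = """
CREATE TABLE IF NOT EXISTS requests (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    report_type TEXT NOT NULL,
    params TEXT NOT NULL,
    status TEXT NOT NULL DEFAULT 'pending',
    result TEXT
)
"""


def submit_request(conn: sqlite3.Connection, report_type: str, params: dict) -> int:
    """Store a new request as 'pending' and return its id."""
    cur = conn.execute(
        "INSERT INTO requests (report_type, params) VALUES (?, ?)",
        (report_type, json.dumps(params)),
    )
    conn.commit()
    return cur.lastrowid


def _finish(conn: sqlite3.Connection, req_id: int, status: str, result: Any) -> None:
    """Record the final status and JSON-encoded result of one request."""
    conn.execute(
        "UPDATE requests SET status = ?, result = ? WHERE id = ?",
        (status, json.dumps(result), req_id),
    )


def process_pending(conn: sqlite3.Connection, handlers: Dict[str, Callable[..., Any]]) -> int:
    """Run every pending request through its handler; return how many were processed."""
    pending = conn.execute(
        "SELECT id, report_type, params FROM requests WHERE status = 'pending' ORDER BY id"
    ).fetchall()
    for req_id, report_type, params in pending:
        handler = handlers.get(report_type)
        if handler is None:
            _finish(conn, req_id, "failed", {"error": f"unknown report type: {report_type}"})
            continue
        try:
            _finish(conn, req_id, "done", handler(**json.loads(params)))
        except Exception as exc:  # keep the failure visible to the requester
            _finish(conn, req_id, "failed", {"error": str(exc)})
    conn.commit()
    return len(pending)


def get_status(conn: sqlite3.Connection, req_id: int) -> Optional[Tuple[str, Any]]:
    """Return (status, decoded result) for a request, or None if the id is unknown."""
    row = conn.execute("SELECT status, result FROM requests WHERE id = ?", (req_id,)).fetchone()
    if row is None:
        return None
    status, result = row
    return status, (json.loads(result) if result is not None else None)
```

One way to wire this up: a web form calls `submit_request`, a scheduled job or worker container calls `process_pending`, and an "all requests" page reads `get_status`.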

Use cases of an always-on SEO requests portal

Reporting on top-performing queries for a set of pages

Many of my content writers and editors want to know which queries are currently performing well on their pages. I can generate a report of these queries in Google Search Console and automate it in any programming language, but either way I still have to run the analysis and send the results back. The SEO requests portal makes this easier for two kinds of requesters:

- I have some early adopters who feel comfortable submitting requests to the portal themselves. This saves me 100% of the time I would’ve spent on that request.

- For requesters who don’t, my reports are still automated, so I can generate custom reports specific to my organization from anywhere (even my phone) in only a few minutes.

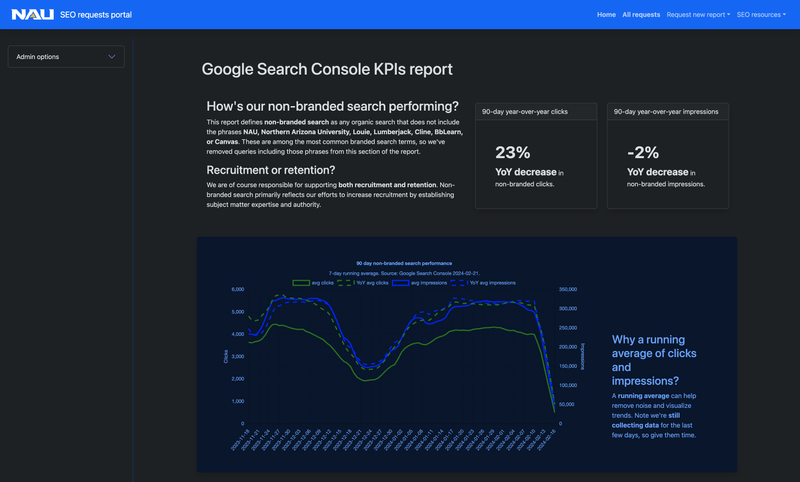
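As a rough illustration of automating this report, the sketch below builds a Search Console Search Analytics request that lists the top queries for pages whose URL contains a given path (for example, one section of a site). It assumes a `service` object created with the `google-api-python-client` library via `build("searchconsole", "v1", credentials=...)`; authentication is left out, and the function names and defaults (28 days, 25 rows) are hypothetical.

```python
from datetime import date, timedelta
from typing import Any, Dict, List, Optional


def build_top_queries_request(
    path_fragment: str, days: int = 28, row_limit: int = 25, end: Optional[date] = None
) -> Dict[str, Any]:
    """Request body for the top queries on pages whose URL contains path_fragment."""
    end = end or date.today()
    start = end - timedelta(days=days - 1)  # startDate and endDate are both inclusive
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["query"],
        "dimensionFilterGroups": [
            {"filters": [{"dimension": "page", "operator": "contains", "expression": path_fragment}]}
        ],
        "rowLimit": row_limit,
    }


def parse_rows(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Flatten API rows into plain dicts, highest clicks first."""
    rows = [
        {
            "query": row["keys"][0],
            "clicks": row["clicks"],
            "impressions": row["impressions"],
            "ctr": row["ctr"],
            "position": row["position"],
        }
        for row in response.get("rows", [])
    ]
    return sorted(rows, key=lambda r: r["clicks"], reverse=True)


def top_queries(service: Any, site_url: str, path_fragment: str, **kwargs: Any) -> List[Dict[str, Any]]:
    """Run the query. `service` is assumed to come from
    googleapiclient.discovery.build("searchconsole", "v1", credentials=...)."""
    body = build_top_queries_request(path_fragment, **kwargs)
    response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
    return parse_rows(response)
```

Search Console data usually lags by a few days, so passing an earlier `end` date may give more complete numbers.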

Narrative dashboarding for Google Search Console and other data

As I mentioned above, this is harder to implement than using a third-party tool to generate dashboards. In exchange for the extra effort, though, I get far more flexibility. A fully custom reporting application lets me create automated narrative reports that are always up to date and provide real value to non-technical stakeholders. It’s worth remembering that this level of automation has costs: time and development expertise.

For this project, I use Flask’s simple design and hypermedia-friendly approach to create unique, data-driven narrative reports. Data is pulled from Google Search Console, cached on the server, and then either passed to a charting library on the client (Chart.js) or rendered on the server and sent to the client as hypermedia (HTML).
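To make the two delivery paths concrete, here is a minimal Flask sketch under stated assumptions: `fetch_daily_clicks` is a hypothetical placeholder for a real Search Console call, the cache is a simple in-process dictionary with a six-hour expiry (how the portal actually caches data isn’t specified), and the route paths and template are made up for illustration. One route returns JSON for Chart.js to plot in the browser; the other renders the same cached data into an HTML fragment on the server.

```python
import time
from typing import Callable, Dict, List, Tuple

from flask import Flask, jsonify, render_template_string

app = Flask(__name__)

CACHE_TTL_SECONDS = 6 * 60 * 60  # hypothetical: refresh cached data every six hours
_cache: Dict[str, Tuple[float, List[dict]]] = {}


def fetch_daily_clicks() -> List[dict]:
    """Hypothetical placeholder for a Search Console API call."""
    return [{"date": "2023-11-01", "clicks": 120}, {"date": "2023-11-02", "clicks": 135}]


def cached(key: str, loader: Callable[[], List[dict]]) -> List[dict]:
    """Return cached data for key, calling loader only when the entry is missing or stale."""
    now = time.monotonic()
    entry = _cache.get(key)
    if entry is not None and now - entry[0] < CACHE_TTL_SECONDS:
        return entry[1]
    data = loader()
    _cache[key] = (now, data)
    return data


@app.route("/api/daily-clicks")
def daily_clicks_json():
    # Path 1: send JSON to the browser, where Chart.js draws the chart.
    return jsonify(cached("daily_clicks", fetch_daily_clicks))


# Bootstrap's "table" class styles the fragment; Flask autoescapes values in template strings.
TABLE_TEMPLATE = """
<table class="table">
  <thead><tr><th>Date</th><th>Clicks</th></tr></thead>
  <tbody>
  {% for row in rows %}<tr><td>{{ row.date }}</td><td>{{ row.clicks }}</td></tr>{% endfor %}
  </tbody>
</table>
"""


@app.route("/fragments/daily-clicks")
def daily_clicks_html():
    # Path 2: render HTML on the server and send it as hypermedia.
    return render_template_string(TABLE_TEMPLATE, rows=cached("daily_clicks", fetch_daily_clicks))
```

An in-process dictionary is the simplest option, but it is lost on restart and isn’t shared between worker processes; a database table avoids both problems at the cost of an extra query.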

For SEOs with strong web development and technical analysis skills, I’d highly recommend considering your own custom reporting site. If you’d prefer to stick with out-of-the-box solutions, tools like Google’s Looker Studio offer a lower barrier to entry for these types of dashboards, though without the flexibility of building your own.

Generating CMS-specific reports of all pages, posts, and documents on a given site

At Northern Arizona University, one of my main projects is supporting large site migrations. As with any migration, the process begins with a holistic analysis of the content currently on the site and recommendations based on it. To speed up the process, I use our CMS’s native APIs (in this case, WordPress) to generate reports on all the pages, titles, meta tags, and other important fields in a migration. Through the SEO requests portal, I can offload this effort and automate the reports we generate.
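A minimal sketch of this inventory step, assuming the site exposes the standard WordPress REST API at `/wp-json/wp/v2/` and that public (unauthenticated) access is enough, which means only published content is returned. It pages through the `pages`, `posts`, and `media` endpoints using the `per_page` and `page` query parameters and the `X-WP-TotalPages` response header, then writes a CSV. The column choices and function names are hypothetical; meta descriptions and similar SEO fields usually come from a plugin rather than WordPress core, so they are left out here.

```python
import csv
import html
import json
from typing import Any, Callable, Dict, List, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen

# A fetcher returns (decoded JSON body, response headers); injectable for testing.
Fetcher = Callable[[str], Tuple[Any, Any]]

FIELDS = ["id", "type", "status", "link", "title", "modified"]


def http_get_json(url: str) -> Tuple[Any, Any]:
    """GET a URL and return its decoded JSON body and headers."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp), resp.headers


def fetch_all(base_url: str, endpoint: str, get_json: Fetcher = http_get_json, per_page: int = 100) -> List[dict]:
    """Collect every item from a WordPress REST collection, one page at a time."""
    items: List[dict] = []
    page, total_pages = 1, 1
    while page <= total_pages:
        query = urlencode({"per_page": per_page, "page": page})
        data, headers = get_json(f"{base_url.rstrip('/')}/wp-json/wp/v2/{endpoint}?{query}")
        items.extend(data)
        # WordPress reports the total number of result pages in this header.
        total_pages = int(headers.get("X-WP-TotalPages", 1))
        page += 1
    return items


def to_row(item: dict) -> Dict[str, Any]:
    """Keep only the fields this hypothetical inventory needs."""
    return {
        "id": item["id"],
        "type": item["type"],
        "status": item.get("status", ""),
        "link": item["link"],
        "title": html.unescape(item["title"]["rendered"]),  # titles arrive HTML-encoded
        "modified": item.get("modified", ""),
    }


def write_inventory(base_url: str, path: str, get_json: Fetcher = http_get_json) -> int:
    """Write pages, posts, and media (documents) to a CSV; return the row count."""
    rows = [
        to_row(item)
        for endpoint in ("pages", "posts", "media")
        for item in fetch_all(base_url, endpoint, get_json)
    ]
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

For example, `write_inventory("https://www.example.edu", "inventory.csv")` would produce one row per published page, post, and uploaded file on that (placeholder) site.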

We use this report as the base for a variety of deliverables:

- I use the report for keyword mapping exercises, proposing updated titles, meta descriptions, and other optimizations in line with the site’s current state.

- UX designers use this report as a base for information architecture visualizations that help clients, copywriters, and web publishers understand the ideal end state for a migrated site.

- Clients use this report, with some additional traffic data, to determine which pages they feel continue bringing value to their site—and which pages do not.

- Copywriters and web publishers use this report to develop a to-do list of all the pages that need to be migrated, reworked, and redirected (if applicable).
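The client review step above combines the inventory with traffic data. One hypothetical way to do that is to join each inventory row to page-level clicks (for example, from a Search Console query using the `page` dimension) and flag low-traffic pages for review. The URL normalization, the `min_clicks` threshold, and the `keep`/`review` labels are illustrative assumptions; deciding what counts as valuable still belongs to the client.

```python
from typing import Dict, Iterable, List


def normalize_url(url: str) -> str:
    """Compare URLs loosely by ignoring a trailing slash (a simplifying assumption)."""
    return url.rstrip("/")


def annotate_with_traffic(
    inventory: Iterable[dict], clicks_by_url: Dict[str, int], min_clicks: int = 10
) -> List[dict]:
    """Return copies of the inventory rows with a clicks column and a suggested action."""
    # Sum clicks for URLs that only differ by a trailing slash.
    clicks: Dict[str, int] = {}
    for url, n in clicks_by_url.items():
        key = normalize_url(url)
        clicks[key] = clicks.get(key, 0) + n

    annotated = []
    for row in inventory:
        n = clicks.get(normalize_url(row["link"]), 0)
        action = "keep" if n >= min_clicks else "review"
        annotated.append({**row, "clicks": n, "suggested_action": action})
    return annotated
```

Pages with no matching traffic get zero clicks and land in the review pile, which keeps the list conservative rather than silently dropping them.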

Project images

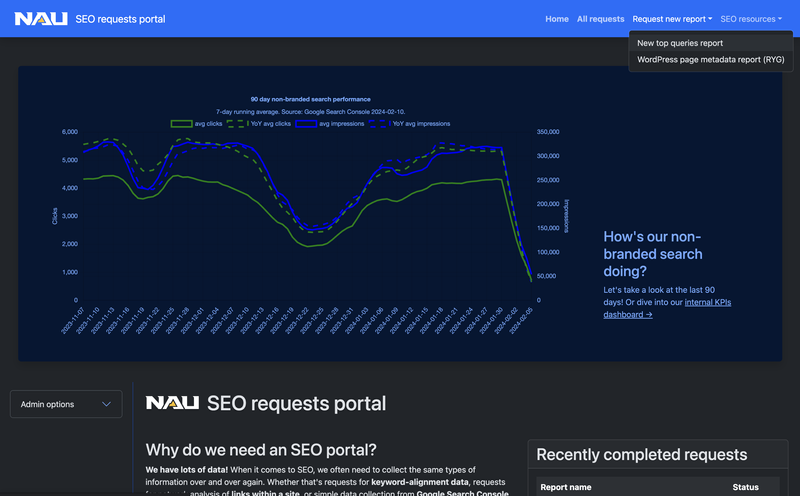

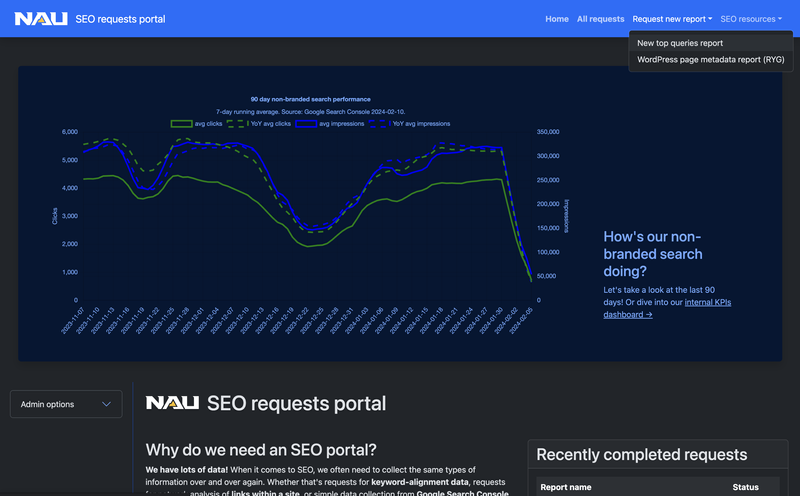

Screenshot of the NAU SEO requests portal homepage, featuring data pulled live from Google Search Console and cached on the server.

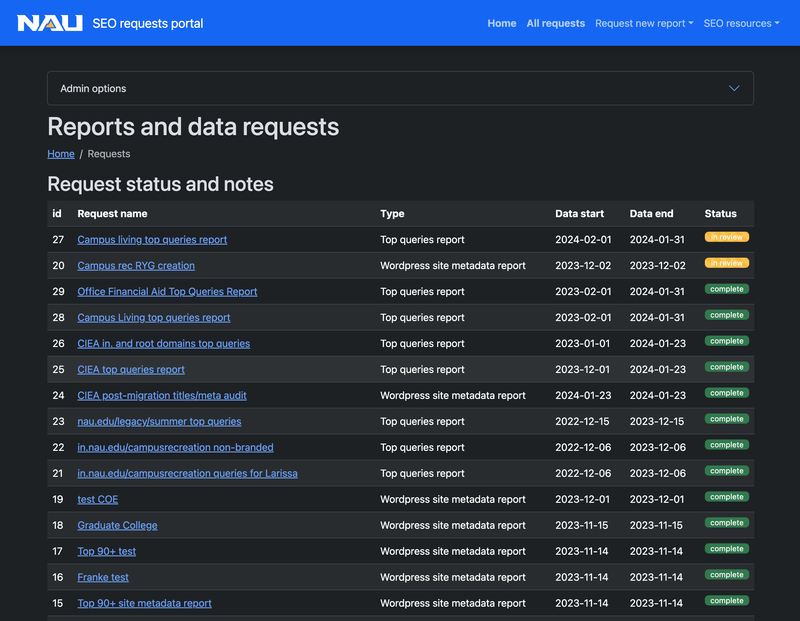

Screenshot of the NAU SEO requests portal "all requests" page, where users can go to find previous data and check the status of their requests.

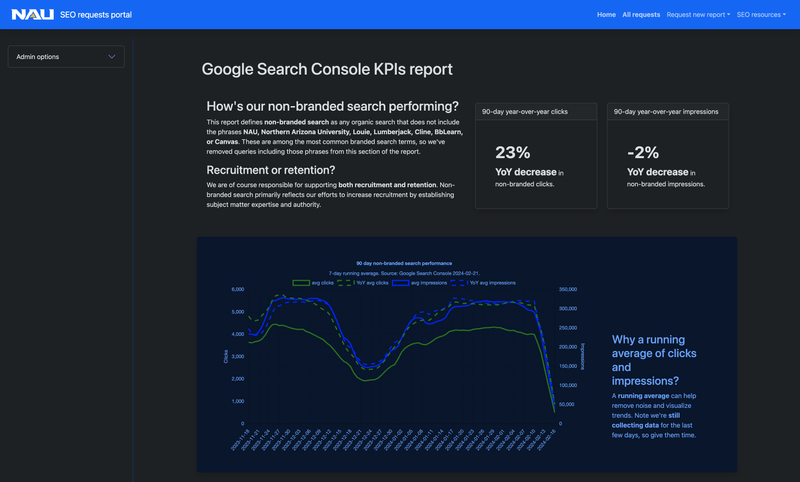

Screenshot of the NAU SEO requests portal "Google Search Console KPIs" report, highlighting non-branded search performance over 90 days.